I’ve been eyeing Runway ML since I took Gene Kogan’s Style Transfer workshop a couple of years ago. Fortunately, Yining Shi’s class on Synthetic Media is based on Runway ML, which gave me a chance to futz around in Runway and see what it’s capable of.

Eventually, I’d like to learn what I could do with font data, like Nan’s project on Machine Learning Font.

So far, I’ve spent my time with Runway figuring out the interface and trying out the models it offers.

Some models, like the Cartoon GAN, work like a highly stylized Style Transfer:

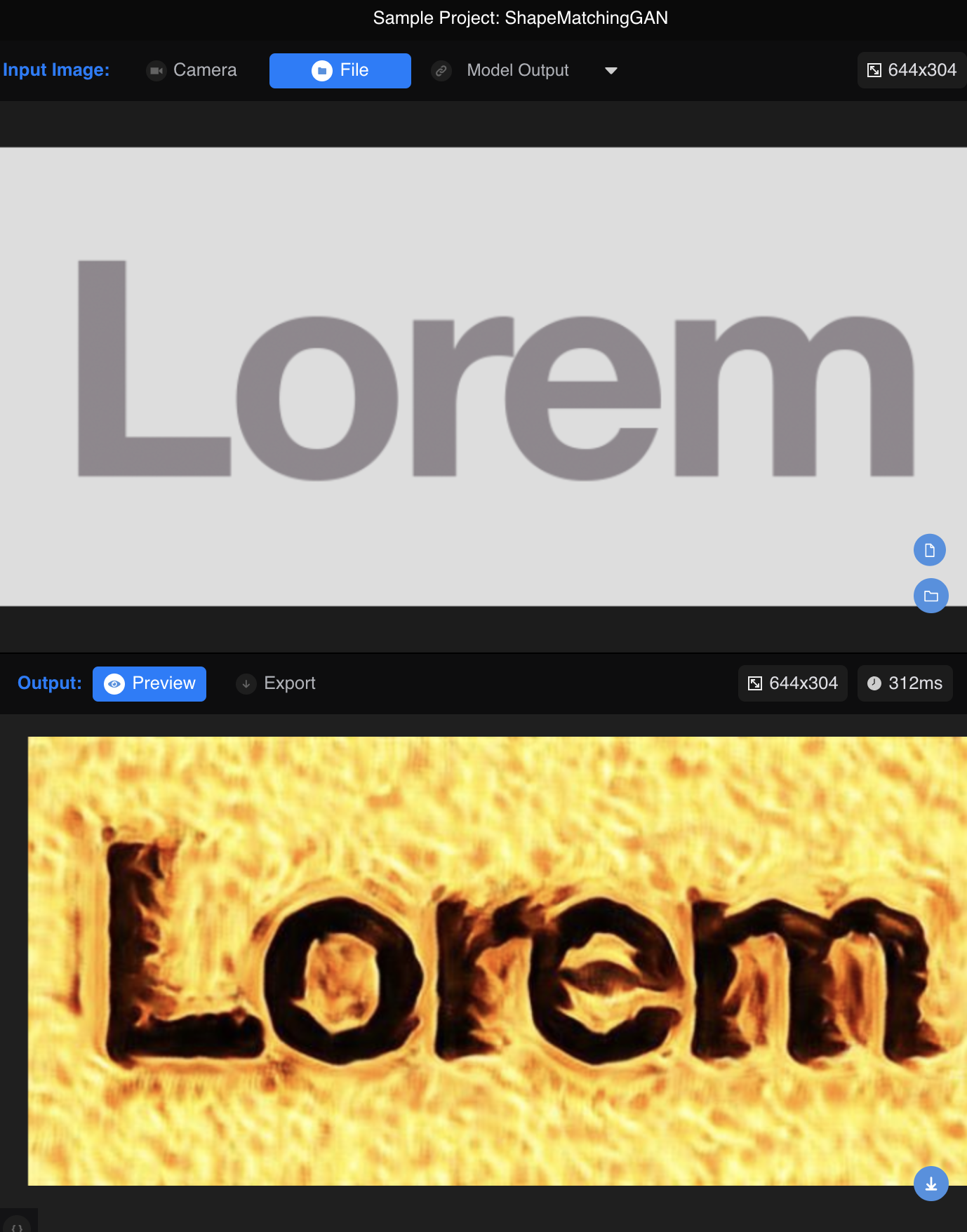

Others do similar things, but with other content (like letters, via the Shape Matching GAN).

Here’s one on text-to-image generation, via AttnGAN. It generates images from text descriptions of scenes.

With still others, like the Ukiyo GAN, I had a hard time figuring out what to do. It gave me a vector and its options, but I wasn’t quite sure how to go about manipulating it in a way that was meaningful for me.

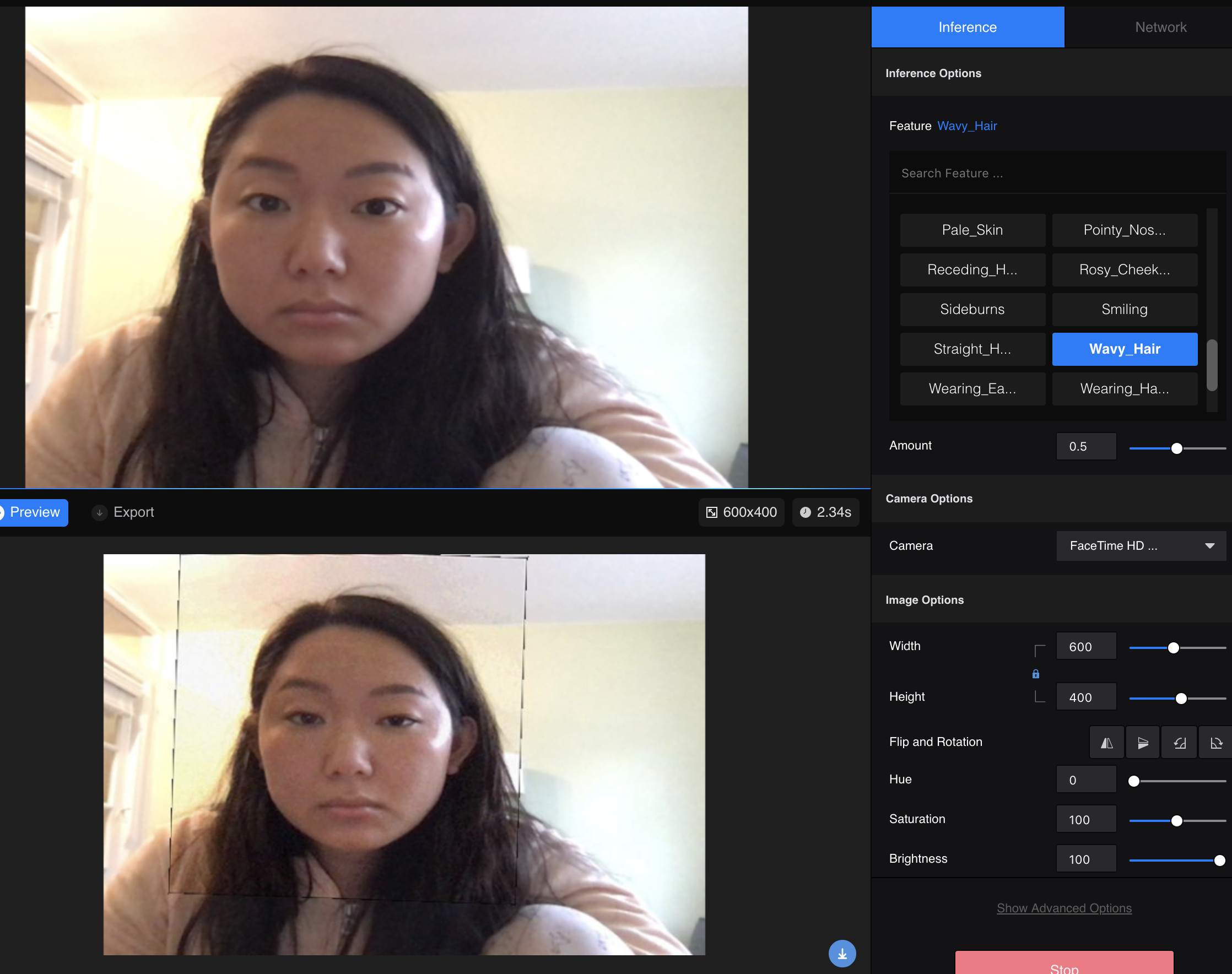
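For what it’s worth, here is one way I could imagine manipulating a vector like that. This is only a minimal sketch, not anything from Runway itself: it assumes the vector is a latent vector sampled from a normal distribution, and the dimension (`LATENT_DIM = 512`) and the helper names are made up for illustration. The idea is to pick two random vectors and step from one to the other, so that each in-between vector could be fed to the model to see how the image morphs.

```python
import random

# Hypothetical latent size; the real model's vector length may differ.
LATENT_DIM = 512


def random_latent(dim, seed):
    """Sample a latent vector from a standard normal distribution."""
    rng = random.Random(seed)
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def lerp(a, b, t):
    """Linearly interpolate between two vectors (t=0 gives a, t=1 gives b)."""
    return [(1.0 - t) * x + t * y for x, y in zip(a, b)]


def interpolation_path(a, b, steps):
    """Return `steps` evenly spaced vectors from a to b, endpoints included."""
    if steps < 2:
        raise ValueError("steps must be at least 2")
    return [lerp(a, b, i / (steps - 1)) for i in range(steps)]


start = random_latent(LATENT_DIM, seed=1)
end = random_latent(LATENT_DIM, seed=2)

# Eight vectors walking from `start` to `end`; each one would be sent
# to the model in turn to produce one frame of the transition.
path = interpolation_path(start, end, steps=8)
```

Changing one or two entries of a single vector by hand is another option, but walking between two vectors tends to make the effect easier to see.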

Some models didn’t work at all or only barely, like the GLOW model. I was confused: was it because of my surroundings and background? Was my face not being recognized properly?

Thoughts: I would like to be able to create something meaningful that goes beyond the superficial.

I had a hard time making something that had meaning to me, and I think that’s where my creativity was blocked. It always felt like I was stealing someone else’s output, and it didn’t feel like it was ‘mine’ on an intellectual level. Most of the existing models are very specific in their output (e.g. Ukiyo), so I think training my own model would go a long way, since the subject and output of any one model aren’t very flexible.